User:ScotXW/Graphical control element

A graphical control element

- is an element of interaction, such as a button or a scroll bar, that is part of the graphical user interface (GUI) of a program.

- is a subroutine written in some programming language (such as C, C++, or Objective-C). It has to be compiled to become executable. A rendering engine is also required to generate the graphical representation, which can be hard-coded or themable.
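The two views above can be sketched together in a few lines. This is only a minimal, text-only illustration in Python rather than one of the compiled languages named above; the `Button` class and the `plain_theme` and `boxed_theme` functions are hypothetical names invented for this sketch and do not belong to any real toolkit. It shows a control element as code whose "graphical" representation is swappable (themable) instead of hard-coded.

```python
from dataclasses import dataclass
from typing import Callable

# Assumption: a "theme" is modelled as a function from a label to a text drawing.
Theme = Callable[[str], str]


def plain_theme(label: str) -> str:
    """Render the label on a single line."""
    return f"[ {label} ]"


def boxed_theme(label: str) -> str:
    """Render the label inside a three-line ASCII box."""
    border = "+" + "-" * (len(label) + 2) + "+"
    return f"{border}\n| {label} |\n{border}"


@dataclass
class Button:
    """Hypothetical control element: behaviour plus a swappable renderer."""
    label: str
    on_click: Callable[[], None]
    theme: Theme = plain_theme

    def render(self) -> str:
        # The representation is delegated to the theme, not hard-coded here.
        return self.theme(self.label)

    def click(self) -> None:
        # The element of interaction: run the attached callback.
        self.on_click()


clicks = []
button = Button("OK", on_click=lambda: clicks.append("OK pressed"))
print(button.render())      # drawn with the default theme
button.theme = boxed_theme  # same element, different representation
print(button.render())
button.click()
print(clicks)
```

A real toolkit would draw pixels through its rendering engine; strings stand in for that here so the sketch stays self-contained.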

Utilization

Libraries that contain a collection of graphical control elements include GTK+, FLTK, wxWidgets, Qt, and Cocoa.

Any program with a GUI can be described as consisting of the program's core logic and its GUI. Hence the same core logic can be paired with different GUIs, using different libraries and targeting different peripherals. Sometimes the core logic is called the back-end and the GUI the front-end. For example, the Transmission BitTorrent client has multiple GUIs written for it. It is an imperfect example, though, since both the GTK+ and the Qt front-end target the computer keyboard and computer mouse as input peripherals.
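The back-end/front-end split described above can be sketched as follows. This is a minimal illustration under stated assumptions, not how Transmission or any real program is structured: `DownloadQueue`, `ListFrontEnd`, and `SummaryFrontEnd` are hypothetical names, and the front-ends produce plain text instead of using a widget library.

```python
from typing import List


class DownloadQueue:
    """Core logic (back-end): holds state and knows nothing about presentation."""

    def __init__(self) -> None:
        self._items: List[str] = []

    def add(self, name: str) -> None:
        self._items.append(name)

    def items(self) -> List[str]:
        # Return a copy so that front-ends cannot mutate the core state.
        return list(self._items)


class ListFrontEnd:
    """One front-end: presents every queued item on its own numbered line."""

    def __init__(self, core: DownloadQueue) -> None:
        self.core = core

    def show(self) -> str:
        return "\n".join(
            f"{i}. {name}" for i, name in enumerate(self.core.items(), 1)
        )


class SummaryFrontEnd:
    """Another front-end on the same core: presents only a count."""

    def __init__(self, core: DownloadQueue) -> None:
        self.core = core

    def show(self) -> str:
        return f"{len(self.core.items())} item(s) queued"


core = DownloadQueue()
core.add("a.iso")
core.add("b.iso")
print(ListFrontEnd(core).show())
print(SummaryFrontEnd(core).show())
```

Because neither front-end is referenced by `DownloadQueue`, either one can be replaced, or a further one added for a different peripheral, without touching the core logic.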

Graphical user interface builders, such as Glade Interface Designer or GNOME Builder, facilitate the authoring of GUIs in a WYSIWYG manner by employing a user interface markup language, in this case GtkBuilder.

GUI design

When implementing the WIMP paradigm, the GUI of a program consists of numerous graphical control elements. The design of any program's GUI is dictated above all by the workflow, as researched by cognitive ergonomics or as reported by an active user population. To be effective, any GUI has to be tailored to the input and output hardware:

A very common but misleading visualization of human–machine interaction: the user "sits on top" of the GUI.

A correct visualization of human–machine interaction: the user "sits below" the hardware. The user interacts directly with the hardware, not with the GUI. Any input and output traverses the kernel and is computed on the CPU, but the human user interacts directly only with the available peripheral hardware.

|

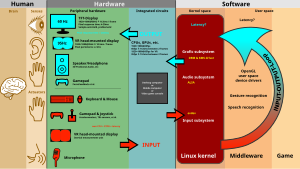

A complete and correct visualization of the human–machine interaction. The human brain is on the far left side, the GUI is on the far right side. A couple of layers do sit in between! The GUI design has to take into consideration the cognitive abilities of the human brain to enable an efficient work flow for any interaction as well as the restrains of the employed peripheral hardware being used. The peripheral hardware is usually tailored to the features of the human (10 fingers, etc.) Output: In case a visual device is even used, this display has a certain size, a certain resolution (raster graphics) and refresh rate. Input: May be a combination of a computer keyboard and some pointing device. Or it may be solely a touchscreen. There could be gesture recognition, voice command recognition. Employment of a screen (display/monitor) does not automatically result in "graphics", but could display merely a Text-based user interface (TUI, cf. ncurses). A TUI can have symbols different than just letters, cf. character encoding. Latency is the delay between input and resulting output. In case this I/O latency becomes to big, it messes with the workflow. |

Visualization of human–machine interaction. Any input and output traverses the kernel.

WIMP with X.
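The remark on latency above, the delay between an input and the resulting output, can be made concrete with a small timing sketch. Everything named here is an assumption for illustration: `timed_response` and `echo_handler` are hypothetical helpers, and the 0.1 second budget is an arbitrary placeholder, not a figure from this article. The sketch also measures only the handler's own processing time, not the full path through the peripherals and the kernel.

```python
import time
from typing import Callable, Tuple

# Assumption: an illustrative budget only; pick a value suited to the workflow.
LATENCY_BUDGET_S = 0.1


def timed_response(
    handler: Callable[[str], str],
    event: str,
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[str, float]:
    """Run handler on one input event; return its output and the elapsed seconds."""
    start = clock()
    output = handler(event)
    return output, clock() - start


def echo_handler(event: str) -> str:
    """Hypothetical handler that stands in for real GUI work."""
    return f"handled {event}"


output, latency = timed_response(echo_handler, "key:a")
status = "within budget" if latency <= LATENCY_BUDGET_S else "too slow"
print(f"{output!r} after {latency * 1000:.3f} ms ({status})")
```

The `clock` parameter is injectable so that the measurement can be checked with a fake clock instead of depending on real elapsed time.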